Dear investors,

The invention of steam engines at the end of the 18th century initiated a period of great advancement in economic productivity that became known as the first industrial revolution. This rapid progress was interpreted at the time in two completely opposite ways: on the one hand, a utopian hope that the increase in productivity would be so great that people would be able to work less and have more free time to enjoy their lives; on the other hand, the fear that machines would replace workers and throw them into unemployment and inevitable poverty. This second interpretation even gave rise to Luddism, a movement of workers who invaded factories and destroyed machines in an attempt to prevent technological advances that, supposedly, would lead them to ruin.

Jumping two centuries ahead, today there is a somewhat similar discussion about the impact that artificial intelligence (AI) will have on the world. Enthusiasts see the potential for a new era of accelerated productivity growth and the consequent production of material wealth for the enjoyment of humanity. Others see a great threat, going to the extreme of predicting an apocalyptic future in which machines controlled by superintelligent AIs conclude that the world would be better off without the human race and decide to eliminate it.

We are not experts on the subject, but it is obvious that the impact of this technology will be substantial, so it seems pertinent to us to follow the matter closely, both to identify investments that could benefit from the advancement of AI and to remain alert to the risks that the coming transformation may pose to certain business segments.

We will share our current view, certainly incomplete and imperfect, of the possible impacts of AI, seeking to be as pragmatic and realistic as we currently can. Any criticisms, disagreements or additions are welcome.

What is Artificial Intelligence today?

Current AI tools are based on machine learning, a branch of computational statistics focused on developing algorithms that adjust their own parameters, over a large number of iterations, to create statistical models capable of making predictions without using a pre-programmed formula. In practice, these algorithms analyze millions of pairs of data (e.g., a text describing an image and the image described) and adjust their parameters in the way that best fits this huge data set. After this calibration, the algorithm is able to analyze only one of the elements of a similar pair of data and, from it, predict the other element (e.g., from a text describing an image, create an image that fits the description).
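For readers who want to see the mechanism in miniature, the calibration described above can be sketched in a few lines of Python. This is only a toy illustration under our own assumptions: the pairs are plain numbers rather than texts and images, the model has just two parameters, and the names `fit_linear`, `predict` and `learning_rate` are hypothetical choices made for this example. Real AI tools follow the same adjust-and-repeat logic with vastly more parameters and data.

```python
def fit_linear(pairs, learning_rate=0.01, iterations=5000):
    """Adjust two parameters (w, b) so that w * x + b fits the (x, y) pairs."""
    w, b = 0.0, 0.0  # start with no knowledge of the relationship
    n = len(pairs)
    for _ in range(iterations):
        # Measure how the average squared error changes with each parameter...
        grad_w = sum(2 * (w * x + b - y) * x for x, y in pairs) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in pairs) / n
        # ...and nudge each parameter in the direction that reduces the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def predict(params, x):
    """Given one element of a pair (x), predict the other (y)."""
    w, b = params
    return w * x + b


# Toy data set: the hidden rule is y = 3x + 1, but the algorithm is never told so.
pairs = [(x, 3.0 * x + 1.0) for x in range(10)]
params = fit_linear(pairs)

# After calibration, the model predicts y for an x it has never seen.
prediction = predict(params, 20)  # close to 61
```

No formula was programmed in advance: the rule relating the two elements of each pair was recovered purely by repeated adjustment against the data.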

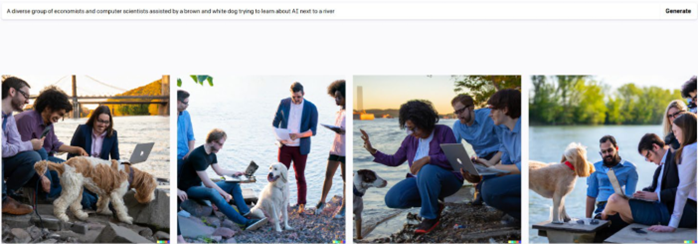

Without going into the philosophical discussion of whether this prediction mechanism based on statistical models is equivalent to human intelligence, the fact is that these models perform tasks that were until recently impossible for software, and they do so quickly, cheaply and better than most humans would. For example, an AI called DALL-E generated the following images from the description: “a diverse group of economists and computer scientists, accompanied by a white and brown dog, trying to learn about AI near a river.”